Much of cognitive neuroscience, as well as traditional cognitive science, is engaged in a quest for mechanisms through a project of decomposing and localizing cognitive functions. Some advocates of the emerging dynamical systems approach to cognition construe it as opposed to the attempt to decompose and localize functions. I argue that this case has not been established, and I instead explore how dynamical systems tools can be used to analyze and model cognitive functions without abandoning decomposition and localization as means of understanding the mechanisms of cognition.

Increasingly, cognitive scientists are finding it very useful to employ a dynamical perspective in modeling cognitive systems (Port and van Gelder, 1995). A dynamical perspective is attractive for a number of reasons. To identify just three: it provides a way of modeling the highly interactive nature of the mind/brain, it takes seriously the fact that cognitive processes unfold in time, and it makes it easy to integrate the internal processes of cognition with features of the environment, which are themselves changing in the course of cognitive activity. I do not question the value of the dynamical approach itself, but I do question one of the ways advocates of dynamical systems approaches seek to contrast their approach with more traditional approaches in cognitive science, approaches that are proving increasingly important in cognitive neuroscience. These advocates contrast dynamical approaches with mechanistic approaches to cognition--the attempt to identify components or modules within cognitive systems and to construe cognitive processes as involving the interaction of these modules. This challenge construes the dynamical approach as advocating a radical holism. I will argue against such holistic construals of the role of dynamical analysis within cognitive science and contend that the dynamical approach ought instead to be conceived of as compatible with a weak modularity.

To make the case that a dynamical conception of cognition is compatible with mechanistic models, I begin with a brief account of mechanistic explanation. I then turn to two sets of dynamicist arguments against mechanistic models, due respectively to Timothy van Gelder and Guy van Orden, and propose answers to both challenges. Lastly, I briefly present a case in which dynamical tools are likely to be extremely important in the process of developing a mechanistic explanation.

1. Mechanistic Explanation Through Decomposition and Localization

One of the claims that advocates of dynamical models of cognition often make is that dynamical models have proven extremely powerful in providing a framework for explaining a host of physical phenomena (van Gelder, 1995). A similar claim, though, can be made about mechanistic models in biology. What makes a model mechanistic, as I am using the term, is that it identifies components in a system and attributes to these components responsibility for performing specific tasks for the system. Mechanistic models have proven powerful in providing explanations of a wide variety of phenomena such as basic metabolism and genetics (both Mendelian and molecular). In Discovering Complexity (Bechtel and Richardson, 1993), Robert Richardson and I focus on how scientists develop such models through a process we call decomposition and localization. Decomposition involves identifying the component tasks that must be performed in order for the system to accomplish its overall activities, while localization involves identifying (or providing evidence for) actual components in the system that carry out these tasks. We trace out a common path through which scientists develop such explanations. Often scientists begin very simply, attributing an overall activity of the system to a single component. We label this endeavor simple or direct localization. In cognition, Broca's (1861) attribution of articulate speech to an area in frontal cortex represents a proposed simple localization. A major virtue of starting with such a simple account is that new evidence is often able to make clear its shortcomings and indicate how to revise it. Such revision frequently involves identifying multiple components, each performing only part of the overall activity. This gives rise to what we call complex localization.
Many researchers took Broca to have localized language function more generally, but Wernicke's (1874) research, developed within the perspective of an associationist psychology, identified a variety of language related tasks and localized them in different areas. Complex localizations assume a linear feedforward processing system, but frequently researchers begin to discover feedback processing in the system as well. Such feedback, as the cyberneticists made clear (Wiener, 1948), provides a means whereby the overall system can self-regulate its activity in the face of an often changing environment. Richardson and I speak of such models as integrated systems.

The fact that mechanistic research often leads to models of integrated systems will become important below when we consider the dynamicist challenge to mechanistic explanations that assume decomposition or modularity. For now, though, it is sufficient to note one important aspect of such mechanistic accounts: the components they identify are assumed to have only first-order independence, enough that we can say what activity each carries out. They are recognized to be interconnected, and these interactions relate the components so that one component can modulate the activity of others. As a result, these components are not Fodorian (Fodor, 1983) modules!

2. van Gelder's Argument for Rejecting Homuncularity

The components isolated in a mechanistic model have been characterized by Dennett (1977) as homunculi--little agents each responsible for one operation in the overall activity of the system. In his presentation of the potential of the dynamical approach to radically alter cognitive science, van Gelder associates homuncularity with representation, computation, and sequential and cyclic operation: "a device with any one of them will standardly possess others" (1995, p. 351). He then contrasts an approach to cognition that assumes these features with a dynamical approach. Here I will focus only on van Gelder's denial of homuncularity to dynamical models (in Bechtel, in press, I examine and criticize his denial of representations to dynamical models).

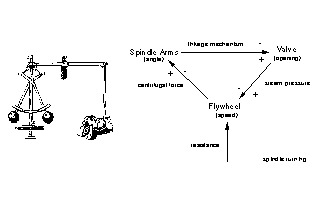

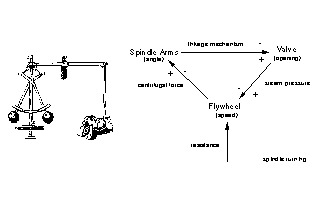

To make his account concrete, van Gelder (1995) compares two models for regulating a steam engine: the Watt centrifugal governor and a computational governor. The Watt governor (Figure 1) employs a spindle mounted on the flywheel and angle arms attached to the spindle. As the flywheel moves faster, the arms move outwards. A linkage system connects these arms to a steam valve in such a way as to begin to close the valve when the arms move out (as a result of the flywheel turning faster) and to open the steam valve as the arms drop. Thus, when the flywheel starts to turn too fast, the steam flow is reduced so as to slow it down, and when it starts to turn too slowly, the steam flow is increased so as to speed it up. The Watt governor is contrasted with a hypothetical computational governor, which might be implemented in a traditional computer program that carries out the following operations:

1. Measure the speed of the flywheel.

2. Compare the actual speed against the desired speed.

3. If there is no discrepancy, return to step 1. Otherwise,

a. measure the current steam pressure;

b. calculate the desired alteration in steam pressure;

c. calculate the necessary throttle valve adjustment.

4. Make the throttle valve adjustment.

Return to step 1. (van Gelder, 1995, p. 348)
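The computational governor's steps can be rendered as a minimal Python sketch, assuming a toy engine model of our own: the throttle valve scales a fixed boiler pressure, and flywheel speed relaxes toward a value proportional to the delivered steam pressure. `ToyEngine`, `computational_governor`, and every constant are hypothetical illustrations, not van Gelder's. What the sketch shows is the homuncular, sequential character of the design: each numbered step is a distinct operation performed in turn.

```python
# Hypothetical toy sketch of van Gelder's step-by-step "computational governor".
# The engine physics and all constants are illustrative assumptions.

DESIRED_SPEED = 100.0      # hypothetical target speed (arbitrary units)
TOLERANCE = 0.5            # hypothetical: discrepancies this small count as "none"
GAIN = 0.1                 # hypothetical: fraction of the discrepancy corrected per cycle
SPEED_PER_PRESSURE = 2.0   # hypothetical: steady-state speed per unit of steam pressure


class ToyEngine:
    """Hypothetical engine: the valve scales boiler pressure, and the flywheel
    speed relaxes toward SPEED_PER_PRESSURE * delivered steam pressure."""

    def __init__(self, speed=60.0, boiler_pressure=80.0, valve=0.5):
        self.speed = speed
        self.boiler_pressure = boiler_pressure
        self.valve = valve  # 0.0 = closed, 1.0 = fully open

    @property
    def steam_pressure(self):
        return self.boiler_pressure * self.valve

    def run(self, dt=0.1):
        target = SPEED_PER_PRESSURE * self.steam_pressure
        self.speed += dt * (target - self.speed)


def computational_governor(engine, cycles=500):
    for _ in range(cycles):
        speed = engine.speed                                # 1. measure flywheel speed
        discrepancy = DESIRED_SPEED - speed                 # 2. compare with desired speed
        if abs(discrepancy) > TOLERANCE:                    # 3. a discrepancy exists:
            pressure = engine.steam_pressure                #    a. measure steam pressure
            target_pressure = pressure + GAIN * discrepancy / SPEED_PER_PRESSURE  # b.
            adjustment = target_pressure / engine.boiler_pressure - engine.valve  # c.
            engine.valve = min(1.0, max(0.0, engine.valve + adjustment))  # 4. adjust valve
        engine.run()                                        # engine runs; return to step 1
    return engine.speed


if __name__ == "__main__":
    print(round(computational_governor(ToyEngine()), 2))
```

Starting from a speed of 60, the loop drives the engine toward the desired speed of 100, but only by cycling through measurement, comparison, calculation, and adjustment as separate stages.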

However, a careful examination of the Watt governor reveals that it is homuncular in just the sense required for a mechanistic explanation. The Watt governor has a variety of different parts: the flywheel, the spindle and angle arms, and a linkage mechanism connected to a valve controlling the steam. As Figure 1 makes clear, these components in fact do different jobs: the valve controls the amount of steam driving the flywheel, the flywheel turns with a specific speed, and the angle arms move out with centrifugal force. This becomes very clear if we attempt to explain how the Watt governor works and why each of the parts is needed: we explain their function in terms of what task they perform for the overall system. For example, we explain the need for the angle arms in terms of the need to transform the information about the speed of the flywheel into a format that can be used by a linkage mechanism to regulate the opening of the steam valve.

It is true, and an important point, that the tasks performed by the parts of the Watt governor do not map onto the operations of the homunculi in the computational governor. Moreover, the vocabulary for describing the parts is the one appropriate for the tasks they perform: angle arms move in and out according to centrifugal force, etc. We do not directly describe the activity of the parts in terms of the overall activity of the governor in regulating speed or in terms of tasks that would be identified in an a priori task analysis. We shift vocabularies from one describing the overall steam engine's behavior to one describing what the parts do. There is then an extra step to connect these processes to the needs of the overall system: we have to note that the angle of the arms carries information about the speed of the flywheel in a format appropriate to the mechanism that will open and shut the valve on the steam engine.
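The point that the Watt governor is both a dynamical system and a decomposable mechanism can be illustrated with a minimal Python simulation, under assumptions of our own. Each part gets its own function, described in its own vocabulary: the flywheel in terms of steam and drag, the arms in terms of centrifugal swing, the linkage in terms of valve opening. All constants, and the saturating formula for the arm angle, are hypothetical choices for illustration, not van Gelder's equations or measurements of a real engine.

```python
# Hypothetical simulation of a Watt-style centrifugal governor as three coupled parts.
# All constants are illustrative assumptions.

DT = 0.01            # integration step (s)
STEAM_GAIN = 10.0    # hypothetical: flywheel acceleration per unit of valve opening
FRICTION = 0.1       # hypothetical: speed-proportional drag on the flywheel
ARM_LAG = 0.5        # hypothetical: time constant of the arms' swing (s)
MAX_ANGLE = 1.0      # hypothetical: arm angle approached at very high speed (rad)
HALF_SPEED = 10.0    # hypothetical: speed at which the arms reach half of MAX_ANGLE
LINKAGE_GAIN = 1.2   # hypothetical: how far the linkage closes the valve per radian


def flywheel(speed, valve, load):
    """Flywheel: rate of change of speed given the steam admitted and the load."""
    return STEAM_GAIN * valve - FRICTION * speed - load


def arms(angle, speed):
    """Angle arms: swing toward an angle that grows with centrifugal force."""
    target = MAX_ANGLE * speed ** 2 / (speed ** 2 + HALF_SPEED ** 2)
    return (target - angle) / ARM_LAG


def linkage(angle):
    """Linkage: the further out the arms, the more closed the valve (0 to 1)."""
    return min(1.0, max(0.0, 1.0 - LINKAGE_GAIN * angle))


def run_governor(load, seconds=60.0):
    speed, angle = 0.0, 0.0
    for _ in range(int(seconds / DT)):
        valve = linkage(angle)
        # Euler step: both state variables update together from the current state,
        # so the parts act concurrently rather than in sequence.
        d_speed = flywheel(speed, valve, load)
        d_angle = arms(angle, speed)
        speed += DT * d_speed
        angle += DT * d_angle
    return speed, linkage(angle)


if __name__ == "__main__":
    for load in (2.0, 3.0):
        speed, valve = run_governor(load)
        print(f"load={load}: speed={speed:.2f}, valve={valve:.2f}")
```

Under these assumptions, a heavier load settles at a slightly lower speed with the valve held wider open. No step of the loop corresponds to "compare" or "calculate," yet each function still has a distinct job whose contribution to regulation must be explained separately, which is the sense of homuncularity at issue here.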

This important point applies as well to the brain: in understanding the brain we need to consider what the component systems in the brain themselves do, rather than impose an a priori task analysis drawn from the cognitive activities we perform with our brains. Terrence Deacon (1997) makes this clear when he speaks of seeking a neural logic, not a linguistic logic, when we try to explain how language operations are performed in the brain:

3. van Orden's Argument for Rejecting Double Dissociations and Subtraction

Another line of attack on mechanistic decomposition on behalf of a dynamical systems approach is developed by van Orden et al. (in preparation). The main target of their critique is the use of double-dissociation studies to decompose cognitive processes into different processing streams (e.g., a lexical and a sub-lexical route in reading), but they also extend the critique to the use of subtraction methodologies in neuroimaging to identify brain areas involved in cognitive performance (van Orden and Paap, 1997). In developing the critique, van Orden et al. draw a contrast between approaches that assume single causes for individual cognitive activities (double-dissociation studies and neuroimaging) and those that assume reciprocal causality (dynamical models). This critique of double dissociation raises some important methodological points. One is that double-dissociation studies assume separate causes for separate effects (if two effects are separate, then their causes are separate). The development of connectionist models that generate double dissociations from single connectionist networks (Plaut et al., 1996) effectively establishes that a double dissociation does not by itself demonstrate the existence of totally separate processing pathways. A second is that the search for pure cases of double dissociation (one case that shows a deficit in one behavior and no deficit in the other, and another that shows the reverse pattern) can be non-terminating and thus risks giving rise to a degenerating research program.

What is noteworthy, and problematic, about van Orden et al.'s approach, though, is that they set up an overly strong version of the decompositional or modular perspective. They claim that

"CONDITION 1: Each rule's input is strictly independent from its output. . . .

CONDITION 2a: The respective sets of rules relate nonoverlapping sets of inputs to nonoverlapping sets of outputs. . . .

OR

CONDITION 2b: They relate the same inputs to nonoverlapping sets of outputs. . . .

OR

CONDITION 2c: They relate nonoverlapping sets of inputs to the same outputs. . ." (p. 40)

We can now identify the problems in van Orden et al.'s critique. The problem stems from the two extremes they put forward and their failure to consider intermediate cases. On the one hand, their account of decomposition or modularity considers only what I earlier labeled simple or complex localizations, not integrated systems. Accordingly, it ignores more sophisticated mechanistic models that allow for extensive interaction between the separate processing components. In integrated systems, components still carry out specific tasks, but they do so in a highly interactive fashion. A powerful example of a model of an integrated system is Felleman and van Essen's (1991) model of visual processing. These researchers identify 32 different brain regions in the visual processing system of the macaque and roughly 300 sets of connections between these areas. These regions are thus highly interconnected, with approximately one-third of the possible interconnections actually realized (the vast majority involving bidirectional connections). Despite the fact that this system is highly interconnected, the different brain regions are thought to carry out different tasks (as determined by single-cell recording and, more recently, neuroimaging). Thus, some areas process color, others shape, and still others movement. The interactions in this system make it a natural subject for a dynamical analysis, but it is also a case of a mechanistic system. Thus, even without single causes or isolated pathways, one can have a form of weak modularity in which components make different contributions even while sharing information. (One point that should be noted about recurrent connections in the brain is that they arise from different layers of cortex, and project to different layers of cortex, than do feedforward connections. This is just one among several reasons for thinking they perform different tasks. They do not merely function to achieve holistic integration.)

A similar objection can be raised to the perspective on dynamics that van Orden et al. advocate. The strong holist claims they advance stem from assuming a system that is totally interconnected. Increasingly, however, neural network researchers are moving away from totally interconnected networks to more modular networks in which specific processing components are primarily responsible for processing different kinds of information. In one clear example, Jacobs, Jordan, and Barto (1991) developed a network system comprising three separate feedforward networks and a gating network that determines which of the competing networks responds to a particular input. They show that in a simple version of a task requiring identification of either the location or the identity of an input letter, the system assigned one network without hidden units to the location task and another network with hidden units to the identification task. This distribution of tasks arose during learning; it was not engineered. Not only does a modular design seem to give greater efficiency than a nonmodular system, but it also avoids serious known liabilities of network systems such as catastrophic interference. Thus, even within connectionist frameworks that generally emphasize a high degree of integration, there is evidence of task decomposition. (This can even be found in connectionist networks that challenge claims of multiple routes based on double dissociations: these networks can mimic the effects of double dissociation precisely because different parts of the networks carry out different processing tasks and so create different deficits when lesioned.)
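The routing idea behind such a gating architecture can be illustrated with a minimal Python sketch of a forward pass with hand-set weights. The three experts, the use of the first input component as a task flag, and all weights are hypothetical. In Jacobs, Jordan, and Barto's system the division of labor was learned, which this sketch does not attempt to reproduce; it shows only how a gate's probabilities decide which expert's output dominates.

```python
import math


def softmax(scores):
    """Convert raw gating scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def where_expert(x):
    """Hypothetical expert with no hidden units: a direct linear map."""
    return [x[1], x[2]]


def what_expert(x):
    """Hypothetical expert with one layer of two tanh hidden units."""
    h1 = math.tanh(2.0 * x[1] + x[2])
    h2 = math.tanh(x[1] - 2.0 * x[2])
    return [h1 + h2, h1 - h2]


def spare_expert(x):
    """Hypothetical third expert that the gate largely ignores here."""
    return [0.0, 0.0]


EXPERTS = [where_expert, what_expert, spare_expert]


def gate(x):
    """Hypothetical gating network: scores depend on the task flag x[0]
    (0.0 = location task, 1.0 = identity task)."""
    task = x[0]
    return [4.0 * (1.0 - task), 4.0 * task, 0.0]


def mixture_of_experts(x, experts=EXPERTS, gate_fn=gate):
    """Blend the experts' outputs, each weighted by the gate's probability."""
    weights = softmax(gate_fn(x))
    outputs = [expert(x) for expert in experts]
    blended = [
        sum(w * out[i] for w, out in zip(weights, outputs))
        for i in range(len(outputs[0]))
    ]
    return blended, weights


if __name__ == "__main__":
    for task in (0.0, 1.0):
        output, weights = mixture_of_experts([task, 0.3, -0.2])
        print(task, [round(w, 3) for w in weights], [round(o, 3) for o in output])
```

When one gating probability is close to 1, the blended output is essentially that expert's output, so in effect a single component responds to each kind of input while all experts remain part of one interconnected system.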

4. Using Dynamical Tools in a Decompositional Research Program

My strategy has been to question the dynamicist claim that a dynamical approach is incompatible with a mechanistic conception of a system that emphasizes decomposition and localization. In this last section, I briefly point to the kinds of problems that arise in mechanistic systems that make it natural to invoke dynamical tools within a mechanistic framework. To do this I will return to the case of language processing in the brain. Earlier I identified Broca's approach to language processing as constituting a simple localization and Wernicke's project as pointing to a complex localization. Recently Deacon (1997) has provided a perspective that suggests a much more integrated model. Above I quoted his suggestion that we view language as grafted onto a primate brain; in following this path Deacon appeals to evidence that electrical stimulation used to inject noise into different parts of the frontal, parietal, and temporal cortex interrupts various aspects of language processing. From this he argues that a wide range of brain areas are involved. Two points, though, are especially noteworthy: stimulation in different areas produces different interruptions, and there is a pattern of concentric regions that produce the same kinds of disruptions. Thus, stimulation of areas closest to motor cortex interferes with the motor activities of speech, but stimulation of areas both anterior and posterior to these begins to affect syntactic and semantic processes. Deacon advances an intriguing framework to account for this and other evidence. He hypothesizes that the areas that affect the same activity are joined by recurrent connections, possibly creating different attractor systems. As the network settles, it passes information on to areas closer to the actual motor control involved in speech. Areas closer to each other can settle into attractors much more quickly than those that are more widely separated, and this difference in timing may have functional significance.

Slow neural signal transmission can also become a limiting factor in the brain, so that very rapid processes are best handled within a very localized region, whereas the accumulation of information over time might better be served by a more distributed and redundant organization that resists degradation. So it makes sense that for each modality, it might be advantageous to segregate its fast from its slow processes (1997, p. 291).

Modeling such systems with highly recurrent internal connections and slow feedforward to other processing components is the precise sort of task for which dynamical tools are required. And they are required in the course of modeling a system with components carrying out different activities--a mechanistic system.
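A deliberately simplified Python sketch can show one ingredient of Deacon's proposal: slower signal transmission within a recurrent loop slows its approach to an attractor. It models a region as a single linear recurrent loop whose feedback arrives after `delay` steps. The update rule, the parameter values, the function name `settling_steps`, and the use of delay as a stand-in for anatomical separation are all assumptions for illustration, not Deacon's model.

```python
def settling_steps(delay, gain=0.8, drive=1.0, rate=0.1, tol=1e-3, max_steps=100_000):
    """Steps until a delayed recurrent loop comes within `tol` of its fixed point.

    Hypothetical update rule:
        x(t+1) = (1 - rate) * x(t) + rate * (gain * x(t - delay) + drive)
    With 0 < gain < 1 the fixed point (a point attractor) is drive / (1 - gain).
    """
    fixed_point = drive / (1.0 - gain)
    history = [0.0] * (delay + 1)   # x(t - delay), ..., x(t); the loop starts at rest
    for step in range(max_steps):
        x_now = history[-1]
        if abs(fixed_point - x_now) < tol:
            return step
        x_delayed = history[0]      # feedback that has only now arrived
        x_next = (1.0 - rate) * x_now + rate * (gain * x_delayed + drive)
        history.append(x_next)
        history.pop(0)
    return max_steps


if __name__ == "__main__":
    for delay in (0, 5, 20):
        print(f"delay={delay}: settles in {settling_steps(delay)} steps")
```

Because the feedback is positive with gain below 1 and the loop starts at rest, the activity rises monotonically toward the fixed point; a longer delay makes it rise more slowly, so tightly coupled (short-delay) loops settle sooner than widely separated (long-delay) ones under these assumptions.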

5. Conclusions

Dynamical approaches are not incompatible with mechanistic explanations developed through decomposition and localization, but in fact have an important role to play within mechanistic research. The appearance of incompatibility results from an excessive holism in the characterization of dynamical models and a failure to recognize the sort of integrated mechanisms that result from decomposition and localization.

References

Bechtel, W. (in press). Representations and cognitive explanations: Assessing the dynamicist's challenge in cognitive science. Cognitive Science.

Bechtel, W. & Richardson, R. C. (1993). Discovering complexity: Decomposition and localization as strategies in scientific research. Princeton: Princeton University Press.

Broca, P. (1861). Remarques sur le Siège de la Faculté du Langage Articulé, Suivies d'une Observation d'Aphémie. Bulletins de la Société Anatomique de Paris, 6, 343-57.

Deacon, T. (1997). The symbolic species. New York: Norton.

Dennett, D. C. (1977). Brainstorms. Cambridge, MA: MIT Press.

Felleman, D. J. & van Essen, D. C. (1991). Distributed hierarchical processing in the primate cerebral cortex. Cerebral Cortex, 1, 1-47.

Fodor, J. (1983). Modularity of mind. Cambridge, MA: MIT Press.

Jacobs, R. A., Jordan, M. I., & Barto, A. G. (1991). Task decomposition through competition in a modular connectionist architecture: The what and where vision tasks. Cognitive Science, 15, 219-250.

Plaut, D. C., McClelland, J. L., Seidenberg, M. S., & Patterson, K. E. (1996). Understanding normal and impaired word reading: Computational principles in quasi-regular domains. Psychological Review, 103, 56-115.

Port, R. & van Gelder, T. (eds.) (1995). Mind as motion. Cambridge, MA: MIT Press.

van Gelder, T. (1995). What might cognition be, if not computation? The Journal of Philosophy, 92, 345-381.

van Orden, G. C., Pennington, B. F., & Stone, G. O. (in preparation). What do double dissociations prove? Inductive methods and theory in psychology.

van Orden, G. C. & Paap, K. R. (1997). Functional neuroimages fail to discover pieces of mind in the parts of the brain. Philosophy of Science, 63.

Wernicke, C. (1874). Der Aphasische Symptomencomplex: Eine Psychologische Studie auf Anatomischer Basis. Breslau: Cohn and Weigert.

Wiener, N. (1948). Cybernetics: Or, control and communication in the animal machine. New York: Wiley.